Call centers are unique in their ability to generate large data lakes, both structured and unstructured, and to analyze them. For unstructured data (e.g., customer surveys, random call monitors), reviewing a sample of the target universe is generally the most efficient way to analyze the data and assess performance.

REQUIREMENTS FOR STATISTICAL VALIDITY

Sampling requires two conditions to generate statistically valid results:

- A random sample that eliminates bias associated with focused samples for which data sets are selected based on defined criteria, and

- Sufficient sample size to ensure the study is highly representative of the target universe being measured. In general, a random sample of roughly 1,080 will yield 95% confidence with a margin of error of +/- 3%, essentially regardless of the total size of the universe being sampled, provided that universe is large. For small universes, the required sample is somewhat smaller.
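
The figure above is consistent with the standard sample-size formula for estimating a proportion. The sketch below is a minimal illustration, assuming simple random sampling, 95% confidence (z = 1.96), and maximum variability (p = 0.5); under those assumptions the formula gives about 1,068, close to the figure cited. The function name and the optional finite population correction are illustrative choices, not part of any particular QA tool.

```python
import math

def required_sample_size(margin_of_error, z=1.96, p=0.5, population=None):
    """Minimum simple random sample size for estimating a proportion.

    margin_of_error: half-width of the interval (0.03 means +/- 3%).
    z: standard normal critical value (1.96 for 95% confidence).
    p: assumed proportion; 0.5 is the most conservative choice.
    population: optional universe size N for the finite population correction.
    """
    n0 = z ** 2 * p * (1 - p) / margin_of_error ** 2
    if population is not None:
        # Finite population correction: small universes need fewer observations.
        n0 = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n0)

print(required_sample_size(0.03))                    # 1068
print(required_sample_size(0.03, population=5_000))  # 880
```

Because the correction only matters when the sample is a sizable fraction of the universe, the result changes little once the universe reaches tens of thousands of calls, which is why the sample size can be treated as largely independent of universe size.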

QUALITY ASSURANCE SAMPLE SIZE CHALLENGES

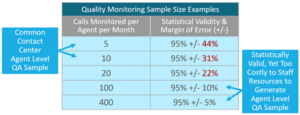

Where do call centers rely most often on sampling to measure performance? Unfortunately, sampling is most common in the archaic process known as Quality Assurance: monitoring a small number of calls to rate an agent’s performance. When evaluating call quality, call centers have been trained by software vendors to think small, pursuing random samples that are too small to be statistically valid in order to generate agent-level scorecard metrics. While this approach meets the first requirement (a random sample), it violates the second (sufficient sample size).

Not only are the sample sizes captured by call centers insufficient to generate valid results; monitoring is also a very expensive and subjective way to measure performance. Whether monitoring is conducted by supervisors or by a dedicated quality assurance (QA) team, the time required to obtain a statistically valid sample is cost prohibitive. Based on the information provided in the chart above, what would it cost your organization to deploy enough resources to accurately assess every agent’s performance with a statistically valid monitoring sample?
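
To make the cost question concrete, the sketch below multiplies out evaluator time. Every input except the sample size (100 agents, 10 minutes of listening and scoring per call, a $30 loaded hourly rate) is a hypothetical placeholder, not a figure from the chart; substitute your own numbers. The 1,080-call sample per agent is the figure cited earlier.

```python
def qa_monitoring_cost(agents, calls_per_agent, minutes_per_call, hourly_rate):
    """Estimate evaluator hours and labor cost to monitor a sample for every agent.

    minutes_per_call should cover listening plus scoring and feedback time.
    All arguments are placeholders supplied by the reader.
    """
    total_calls = agents * calls_per_agent
    hours = total_calls * minutes_per_call / 60
    return hours, hours * hourly_rate

# Hypothetical inputs: 100 agents, 1,080 calls each, 10 minutes per call, $30/hour.
hours, cost = qa_monitoring_cost(agents=100, calls_per_agent=1080,
                                 minutes_per_call=10, hourly_rate=30)
print(f"{hours:,.0f} evaluator hours, ${cost:,.0f}")  # 18,000 evaluator hours, $540,000
```

Assuming about 2,000 paid hours per full-time evaluator per year, those hypothetical inputs translate into roughly nine full-time evaluators for a single round of measurement.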

Even if you were to use a rolling two- or three-month average to achieve statistical validity, the costs remain excessive relative to the value delivered. Many of our clients have abandoned call scoring after realizing that the scores are not correlated with customer sentiment (CSAT or NPS). Instead, they use a variety of approaches to generate actionable insights into the root causes of performance gaps.
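
A quick calculation shows why a rolling window does not rescue small samples. Assuming, hypothetically, 10 monitored calls per agent per month pooled over three months, the margin of error at 95% confidence (z = 1.96, p = 0.5) is still close to +/- 18%; reaching the 1,080-call sample cited earlier within a three-month window would require monitoring 360 calls per agent per month. The helper names are illustrative.

```python
import math

def margin_of_error(n, z=1.96, p=0.5):
    """Half-width of the confidence interval for a proportion estimated from n calls."""
    return z * math.sqrt(p * (1 - p) / n)

def calls_per_month_needed(required_sample, window_months):
    """Calls to monitor per agent per month so a rolling window reaches required_sample."""
    return math.ceil(required_sample / window_months)

# Hypothetical: 10 monitored calls per agent per month, pooled over 3 months.
pooled = 10 * 3
print(f"+/- {margin_of_error(pooled):.1%}")  # +/- 17.9%
print(calls_per_month_needed(1080, 3))       # 360
```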

SPEECH ANALYTICS AS A QA TOOL

Why do some call centers still use this outdated and statistically flawed approach? Because software vendors continue to cultivate the market for their monitoring and scoring QA software as part of broader workforce optimization suites. Yet even as they promote this traditional approach, vendors have begun to offer speech analytics for QA purposes. Aware of the sample size problem, they position speech analytics as a way to capture and score 100% of calls through automation. Speech analytics can identify specific words used during a call and thus measure compliance, but its findings rest on transcription and automated analysis, which may miss critical nuances and subtleties of a call. McIntosh believes speech analytics is a powerful performance management tool for root cause analysis, because supervisors can develop queries that quickly identify call segments for additional monitoring. That additional monitoring, conducted by trained personnel, goes beyond compliance and sentiment monitoring to gauge the effectiveness and efficiency of an agent’s skills.
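
As a rough illustration of the kind of query described above, the sketch below scans call transcripts for phrases and returns the matching segments (call and start time) for a supervisor to review. It assumes transcripts are already available as speaker-labeled utterances; the `Utterance` structure, the `find_segments` function, and the sample phrases and calls are all hypothetical. Commercial speech analytics platforms provide their own query languages, and this is not any vendor's API.

```python
from dataclasses import dataclass

@dataclass
class Utterance:
    call_id: str
    start_sec: float
    speaker: str  # "agent" or "customer" (hypothetical labels)
    text: str

def find_segments(utterances, phrases, speaker=None):
    """Return (call_id, start_sec, text) for utterances containing any phrase.

    Uses a simple case-insensitive substring match, optionally limited to one speaker.
    """
    wanted = [p.lower() for p in phrases]
    hits = []
    for u in utterances:
        if speaker is not None and u.speaker != speaker:
            continue
        lowered = u.text.lower()
        if any(p in lowered for p in wanted):
            hits.append((u.call_id, u.start_sec, u.text))
    return hits

# Hypothetical transcript fragments from two calls.
transcript = [
    Utterance("C1", 12.0, "customer", "I already called about this twice"),
    Utterance("C1", 20.5, "agent", "Let me put you on hold while I check"),
    Utterance("C2", 5.0, "customer", "Can I speak to a supervisor?"),
]

for hit in find_segments(transcript, ["called about this", "supervisor"], speaker="customer"):
    print(hit)
```

The output is a list of call segments to listen to, not a score; the human review that follows is where skill gaps are actually diagnosed.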

OPTIMIZING CALL MONITORING RESOURCES

The human ear remains a valuable tool for assessing and addressing agent performance: not through scoring inadequate sample sizes, but through focused monitoring that identifies an agent’s knowledge and skill gaps. Scores are no longer relevant and are replaced by actionable performance insights. Behavioral checklists are gone too; in their place are customized tools that reflect each call center’s unique environment and let the supervisor or QA team identify the root causes of performance failures. Instead of targeting 5 or 10 calls to observe each month, McIntosh recommends observing calls or call segments (portions of calls, ideally identified by speech analytics) until patterns of behavior that undermine effective and efficient call handling become clear. Once uncovered, these behaviors can be addressed through coaching and training.

For additional detail on McIntosh’s recommended call monitoring methodology, please see our Tip Sheet: Call Segment Monitoring, which can also be found on our website. To arrange a complimentary discussion regarding your QA program’s sample size and approach, please email Beverly McIntosh at bmcintosh@mcintoshassociates.com.